March 7, 2024 | AI and NLP

What One Year of ChatGPT has Taught Me About the Future of Quality Measurement – Part 2. Moving Beyond the Hype

If you had a chance to read Part 1 of this blog last week, you know that I am extremely optimistic about the value of generative AI to healthcare quality measurement. In Part 2, I am going to give you a sense of how these technologies work, as well as what speed bumps lie ahead of us. I’ll also explain why our field of healthcare quality measurement is such a good fit for this new technology. Finally, I’ll discuss a question that is on everyone’s mind: when this transition occurs, how will it impact our workforce and organizations?

Rebecca Jacobson, MD, MS, FACMI

Co-Founder, CEO, and President

What are These Large Language Models and How Do You Use Them?

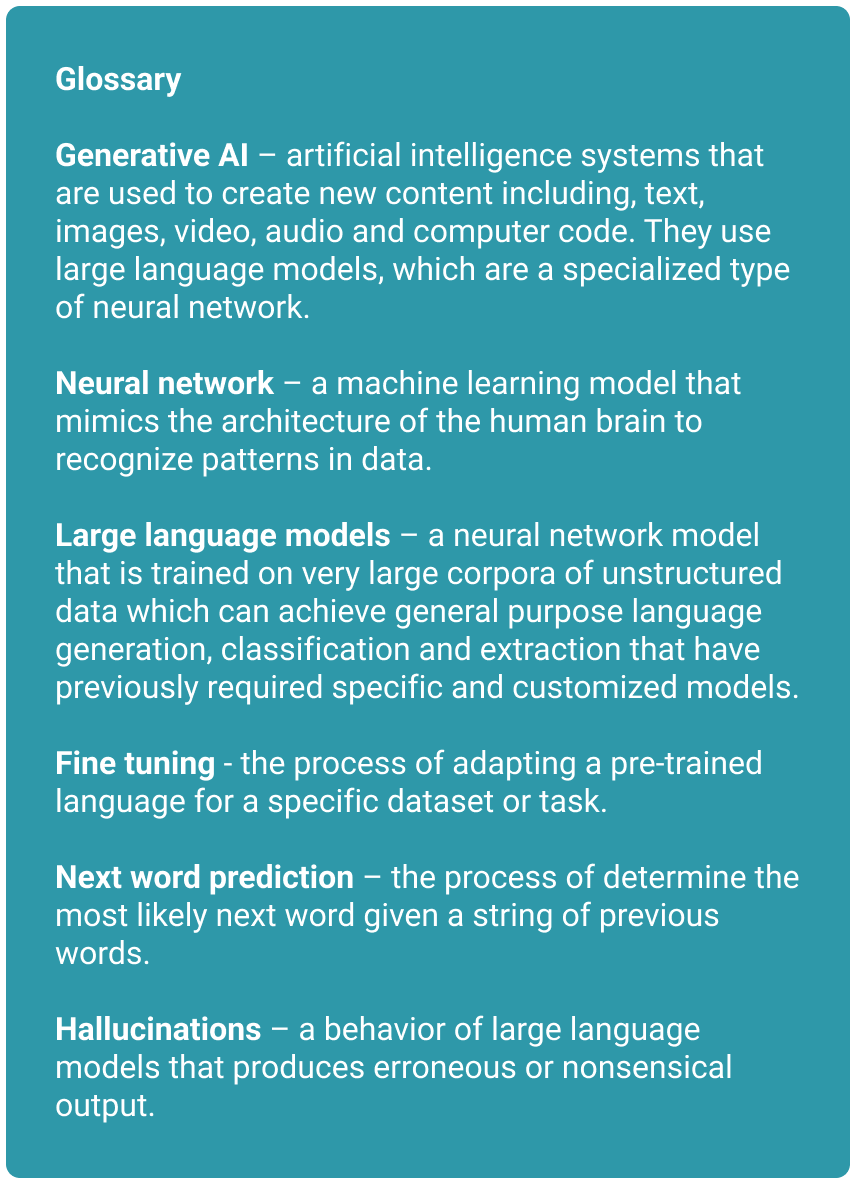

Many people tend to shy away from the technical details of how these models work, but I encourage you to keep reading. My goal is to give you a framework to understand this technology, so that you have a sense of what it does well, what it does less well, and some of the challenges we may encounter when using it in healthcare settings. If you encounter unfamiliar terms, check out the glossary below for definitions.

Training the Model

Before you can use a generative AI model, you must first train it. Training a large language model (which is a specific kind of neural network) is a costly endeavor that takes many days or weeks, lots of computer time, and a huge amount of data (usually some significant part of the Internet). Today, training a large language model could cost you millions of dollars, so most startups, digital health companies, and healthcare organizations will never do this training step by themselves. This training creates a base model, which is then refined in a second step, called fine-tuning, with additional data that includes human-labeled examples. The base model can be thought of as a kind of lossy, compressed version of the huge corpus of data used in the training. The model preserves the gestalt of what’s going on without retaining all the details.

Using the Model

In contrast, using a large language model for a variety of different tasks is relatively fast and inexpensive. This is something that startups, digital health companies, and healthcare organizations can do on their own to accomplish work. And these models generalize to different types of work, with very strong performance. For the purpose of generating text and answering questions, the basic mechanism that LLMs use is next-word prediction. The model starts with some text (your prompt) and uses its compressed representation of the large corpus it was trained on to predict what the next word should be. It adds that word to the text and repeats the process, and it just keeps going until it has generated the entire document or answer, or reaches some other endpoint.
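To make next-word prediction concrete, here is a deliberately tiny sketch. It is not how a real large language model is built: it replaces the neural network with a simple table that counts which word follows which in a made-up three-sentence corpus. The function names and the corpus are my own illustrative assumptions. What it does share with an LLM is the loop: predict the next word, append it, and repeat until an endpoint is reached.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """'Training': count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def generate(model: dict[str, Counter], start: str,
             max_words: int = 10, stop: str = ".") -> str:
    """'Using': repeatedly pick the most likely next word until an endpoint."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:  # the model never saw this word, so it cannot continue
            break
        next_word = candidates.most_common(1)[0][0]
        output.append(next_word)
        if next_word == stop:  # a period is this toy model's endpoint
            break
    return " ".join(output)

# Illustrative, made-up corpus (real models train on vastly more text).
corpus = (
    "the patient had a colonoscopy . "
    "the patient had a mammogram . "
    "the patient had a colonoscopy ."
)
model = train_bigram_model(corpus)
print(generate(model, "the"))  # prints: the patient had a colonoscopy .
```

Notice that the toy model no longer contains the original sentences, only counts. That is a very rough analogue of the lossy, compressed representation described above.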

Extracting HEDIS® Inclusion and Exclusion Criteria Using Large Language Models

One task that these systems can be very good at is extracting information from electronic health records. For example, a large language model (LLM) can be used to extract HEDIS® information from free text, including the specific interventions that close a gap as well as the dates when they occurred. While there are a few ways to increase accuracy, one interesting aspect of these models is that their accuracy tends to improve smoothly as the size of the model increases. If you want to extract HEDIS® information more accurately, use a bigger model.
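For readers who want to picture what such an extraction step might look like in practice, here is a minimal sketch under several assumptions of my own. The prompt wording, the `extract_screenings` function, the JSON output format, and the `stub_model` stand-in are all hypothetical; they are not Astrata’s implementation or any vendor’s API. The stub returns a canned reply so the example runs without a real model. The point is the overall shape: ask for structured output, then validate it before trusting it.

```python
import json
from datetime import date
from typing import Callable

# Hypothetical prompt; the measure and wording are illustrative only.
PROMPT_TEMPLATE = (
    "Extract every colorectal cancer screening from the note below. "
    "Reply with a JSON list of objects with keys 'intervention' and "
    "'date' (YYYY-MM-DD).\n\nNote:\n{note}"
)

def extract_screenings(note: str, call_model: Callable[[str], str]) -> list[dict]:
    """Ask a model for structured data and keep only the items that validate."""
    raw = call_model(PROMPT_TEMPLATE.format(note=note))
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []  # the model did not return valid JSON
    if not isinstance(items, list):
        return []
    results = []
    for item in items:
        try:
            results.append({
                "intervention": str(item["intervention"]),
                "date": date.fromisoformat(item["date"]),  # rejects malformed dates
            })
        except (KeyError, TypeError, ValueError):
            continue  # skip incomplete or malformed items
    return results

def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same canned reply."""
    return (
        '[{"intervention": "colonoscopy", "date": "2021-03-14"},'
        ' {"intervention": "FIT", "date": "unknown"}]'
    )

note = "Pt reports colonoscopy on 3/14/2021. FIT kit mailed, result not on file."
for hit in extract_screenings(note, stub_model):
    print(hit["intervention"], hit["date"].isoformat())
```

In a real deployment, `stub_model` would be replaced by a call to whichever hosted or locally deployed model you use. The validation step matters either way, because it discards output that does not have the expected structure.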

Open-Source and Proprietary (Closed-Source) Models

Since most of us won’t ever train our own large language models, using this technology for specific tasks usually requires either a third-party provider with a closed-source model or an open-source model that you host and work with yourself. Closed-source models are proprietary and privately trained. The third party that created the model sells access to it, but users cannot access the source code or model weights, and they often don’t know much about the data used to train the model. ChatGPT is an example of a proprietary, closed-source model offered by a third-party provider, OpenAI. In contrast, open-source models can be used freely and provide access to the model source code and often to the model weights. They often provide more transparency about what data is used to train the model, and they often encourage community contribution. Mistral is an example of an open-source model, developed by Mistral AI.

What Could Hold Us Back?

There are a number of challenges to using large language models in general. Let’s start with those before I explain why I think these are conquerable challenges if our goal is to use this technology to enhance healthcare quality measurement.

1. Intellectual Property Rights

One emerging problem for large language models has been the data that is used to train them. Questions remain about whether third-party model providers even have the right to use all the data they are currently using to train the model, or whether this is a violation of copyright law. For example, the New York Times and other publishers are currently suing OpenAI because of their use of copyrighted data. It is likely that the closed-source models will have much bigger problems with intellectual property, in part because of the lack of transparency about what data they use.

2. Security and Privacy Concerns

Many healthcare organizations are deeply concerned about the use of large language models because third-party model providers are concentrated in a small number of relatively new startup companies without a long history of privacy and security efforts. Furthermore, certain adversarial techniques seem to be able to retrieve specific data that has been “memorized” by the model, which has potentially important implications for the use of protected health information (PHI) in training models. We clearly don’t want it to be possible for a model trained on electronic health records to regurgitate patient medical records! Many of these third-party providers will now sign a Business Associate Agreement (BAA), but this does not really address the underlying problem. Regulatory oversight is needed. New organizations such as the Coalition for Health AI (CHAI) are forming now and may help us to better understand and control the balance of benefits and risks. However, until there is more clarity, healthcare organizations are likely to be wary of using third-party providers.

3. Hallucinations and Error Tolerance

A third major problem for large language models is that their output is non-deterministic and not always reliable. You may have encountered this if you’ve used any of the available tools such as ChatGPT. The system can answer questions or produce output that seems generally quite good. But occasionally it comes back with something that is completely wrong, and the error is often very difficult to spot because it fits so well within the context of the larger answer. This kind of behavior is called a “hallucination” and it was an immediate cause for concern when these models were first released. While it appears that hallucinations have declined in some of the closed-source models over the last year, many researchers think that it will be nearly impossible to eliminate them entirely. In fact, this behavior might be inherent to these types of models. Therefore, when we plan to use this technology, we need to think about which use cases have the appropriate profile of error tolerance.
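One source of the non-determinism is that these systems typically sample the next word from a probability distribution rather than always taking the single most likely word. The sketch below illustrates only that sampling step, not hallucination as a whole. The scores are made-up numbers and the function name is my own; the "temperature" setting is a common way to control how much randomness is allowed.

```python
import math
import random

def sample_next_word(scores: dict[str, float], temperature: float,
                     rng: random.Random) -> str:
    """Turn scores into probabilities (softmax) and draw one word at random."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]

# Made-up scores for three candidate next words.
scores = {"colonoscopy": 2.0, "mammogram": 1.0, "sigmoidoscopy": 0.5}
rng = random.Random(0)  # fixed seed so the demonstration is repeatable

varied = {sample_next_word(scores, 1.0, rng) for _ in range(1000)}
focused = {sample_next_word(scores, 0.01, rng) for _ in range(1000)}

print(sorted(varied))   # several different words appear across runs
print(sorted(focused))  # a very low temperature almost always picks the top word
```

Lowering the temperature makes output more repeatable, but it does not make it correct: if the most likely word is wrong, the model will simply be wrong consistently.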

The Way Forward

While the three issues above are extremely important, I think we will be able to use this technology very effectively in healthcare quality measurement almost immediately. Let me explain why.

First, I am encouraged by the growth of the open-source large language models, because I think these will be a much better alternative for healthcare usage. Open-source models can be deployed locally and used with more control and potentially better and more customized privacy and security protections. Open-source models are also less likely to be associated with the IP challenges of some of the third-party providers.

Second, the tolerance for occasional errors (including hallucinations) when using this technology for administrative tasks such as quality measurement is higher than for more direct clinical tasks such as clinical decision support. Even if hallucinations are baked into the technology, in our experience the degree of impact on accuracy is low for extracting quality measure information. And as I suggested in Part 1, we know there is always a clinician between a gap and a patient. Quality measurement has exactly the right error tolerance profile for this technology at the current time.

What Does the Future Look Like?

I sense that pretty big changes are around the corner as we deploy these new models. I know that’s the case here at Astrata! What can we expect in the future?

I think in the near future (next 1-2 years) we can expect automated abstraction performance that is as good as or better than that of human abstractors and that will be able to scale to very large populations. I think we are going to need to ask ourselves whether we want to continue to perform so much manual review of patient charts, among both providers and payers, or whether the process of converting unstructured chart data to structured quality data should be primarily done by automated systems. This is a question that regulators and measure stewards like NCQA and NQF should consider and where they can provide critical guidance.

Thinking even more disruptively, I question whether these models might end up being a better alternative to our current thinking on interoperability. What if we could simply extract the information from an electronic PDF emitted from an EHR instead of needing to map everything to a common model like FHIR? I know that’s informatics heresy, but given the performance of these models, I wonder whether this might end up being an easier way to get to where we want to go.

Of course, all of this could have substantial impacts on our quality workforce. As we start to automate more and more quality measurement, I think organizations need to think hard about what the future workforce looks like. Perhaps redeploying our medical record reviewers (who are also typically clinicians and quality geeks) to quality improvement activities will provide a more meaningful impact on population health.

So much is changing. Astrata wants to be there with you, from taking the very first steps to delivering on your digital transformation. We hope you will be in touch, follow our LinkedIn posts, subscribe to our newsletter, attend webinars, and keep up with our Chalk Talks. Together, we are poised for a radical transformation with a broad impact on the future of value-based care.